How AI Prototyping Tools Actually Work: A Deep Dive into Bolt's Architecture

Bolt and Lovable have hit $100M in revenue faster than almost any product in history. Here's what I discovered when I pulled apart Bolt's architecture.

A few weeks ago, I teamed up with Tal Raviv to do something I've been wanting to do for months: tear down one of the hottest AI products to understand what's really happening under the hood. As someone who builds tools for AI products at Arize, I'm constantly studying how the best teams implement AI. Bolt presented a perfect case study - simple on the surface, but hiding important lessons about building production AI. Here's what we found:

⚡️ Link here for the session!

Quick note: I'm launching my first course on AI Product Management this summer where we'll go much deeper into building production AI systems. We'll cover evals, agent architectures, and the practical skills you need to ship AI products that actually work.

The Surprising Simplicity

When Tal demonstrated Bolt by asking it to build a notification system for Riverside (a video recording platform), I expected to find complex orchestration, sophisticated agent frameworks, maybe some clever fine-tuning.

Instead, I found this:

Bolt is just a well-crafted prompt talking to an LLM that generates code and terminal commands as text.

That's it. No magic. But that simplicity is precisely why it's brilliant.
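Here is a minimal Python sketch of that core loop. It assumes the common chat-completion message format, a list of `role`/`content` dictionaries. `call_llm` is a hypothetical stand-in for whatever model client is used. This illustrates the idea and is not Bolt's actual code.

```python
from typing import Callable

# A chat message in the role/content format used by common chat-completion APIs
Message = dict[str, str]

def build_messages(system_prompt: str, history: list[Message], user_request: str) -> list[Message]:
    """Prepend the system prompt and append the new request to the conversation."""
    return [
        {"role": "system", "content": system_prompt},
        *history,
        {"role": "user", "content": user_request},
    ]

def generate(
    call_llm: Callable[[list[Message]], str],  # hypothetical model client
    system_prompt: str,
    history: list[Message],
    user_request: str,
) -> str:
    """One turn: the model returns plain text that mixes prose, code, and commands."""
    return call_llm(build_messages(system_prompt, history, user_request))

# A fake model so the sketch runs without an API key
def fake_llm(messages: list[Message]) -> str:
    return f"Plan for: {messages[-1]['content']}"

print(generate(fake_llm, "You are Bolt...", [], "Build me a financial planner website"))
```

Everything interesting happens in the system prompt and in what the app does with the returned text.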

Breaking Down the Architecture

Let me walk you through exactly how Bolt transforms a prompt like "Build me a financial planner website" into working React code:

1. The System Prompt: Where Evolution Meets Engineering

I found Bolt's system prompt in their open-source repository. What struck me wasn't its sophistication - it was the obvious signs of evolution:

You are Bolt, an expert AI assistant and exceptional senior software developer...

[Basic context about web container environment]

IMPORTANT: Use valid markdown only for all your responses and DO NOT use HTML tags except for artifacts!

ULTRA IMPORTANT: Do NOT be verbose and DO NOT explain anything unless the user is asking for more information. That is VERY important.

ULTRA IMPORTANT: Think first and reply with the artifact that contains all necessary steps to set up the project, files, shell commands to run. It is SUPER IMPORTANT to respond with this first.

Those escalating priority levels are what a prompt looks like after you iterate on real user pain points, once thousands of users have hit edge cases. Someone complained about verbose responses, so they added a rule.
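One way to keep an evolving prompt like this manageable is to store each lesson as a rule with an explicit priority and render the prompt from that list. This is a hypothetical sketch. The `Rule` type, the numeric priorities, and the `reason` field are my assumptions, not how Bolt's repository organizes its prompt.

```python
from dataclasses import dataclass

# Labels mirror the escalation visible in Bolt's prompt text
LABELS = {0: "", 1: "IMPORTANT: ", 2: "ULTRA IMPORTANT: "}

@dataclass
class Rule:
    text: str
    priority: int = 0  # 0 = normal, 1 = important, 2 = ultra important
    reason: str = ""   # the user complaint or edge case that prompted the rule

def render_prompt(preamble: str, rules: list[Rule]) -> str:
    """Render the system prompt with the highest-priority rules first (stable order within a level)."""
    ordered = sorted(rules, key=lambda r: r.priority, reverse=True)
    return "\n\n".join([preamble] + [LABELS[r.priority] + r.text for r in ordered])

rules = [
    Rule("Use valid markdown only for all your responses.", 1, "HTML leaking into replies"),
    Rule("Do NOT be verbose and DO NOT explain anything unless asked.", 2, "outputs too long"),
]
print(render_prompt("You are Bolt, an expert AI assistant...", rules))
```

The `reason` field never reaches the model. It exists so each rule can be traced back to the complaint that created it, which makes it easier to decide later whether a rule can be removed.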

2. Chain of Thought: The "Show Your Work" Pattern

Before generating any code, Bolt uses reasoning tokens. Here's the actual flow:

User Request → System Prompt → Reasoning → Context/RAG → Generated Code → Deploy

The reasoning step is where Bolt plans its approach. It's literally thinking out loud: "I need to create a React component with state management for notifications. I'll use TypeScript for type safety. I'll need to handle both success and error states."

This isn't fancy. It's asking the LLM to explain its plan before executing. But the quality difference between "just generate code" and "think first, then generate code" is dramatic. It's like the difference between asking a junior engineer to "just code something" versus asking them to first explain their approach.
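The plan and the code arrive in the same text response, so the client has to separate them. Here is a minimal sketch. It assumes, hypothetically, that the reasoning is whatever plain text appears before the first `<boltAction>` tag.

```python
def split_plan(response: str, marker: str = "<boltAction") -> tuple[str, str]:
    """Split an LLM response into (plan, actions) at the first action tag."""
    idx = response.find(marker)
    if idx == -1:
        return response.strip(), ""  # no actions: the whole reply is prose
    return response[:idx].strip(), response[idx:]

reply = (
    "I'll create a React component with state for notifications.\n"
    '<boltAction type="file" filePath="/src/App.tsx">...</boltAction>'
)
plan, actions = split_plan(reply)
print(plan)  # the model's stated approach, before any code
```

Keeping the plan as a separate string also gives evals something to inspect. You can check whether the code that follows actually matches the stated approach.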

3. Implicit vs Explicit Tool Calling

Here's where things get interesting from an engineering perspective. Many production AI systems use explicit function calling - formally defined APIs the LLM can invoke.

They define APIs like:

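```python
# Illustrative: `function` stands in for a framework's tool-registration decorator
TOOLS = {}

def function(fn):
    """Register fn so its name and docstring can be exposed to the LLM as a tool."""
    TOOLS[fn.__name__] = fn
    return fn

@function
def create_file(path: str, content: str):
    """Creates a file at the specified path"""

@function
def run_command(cmd: str):
    """Executes a terminal command"""
```

Bolt does something different. Everything is implicit: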

```xml
<boltAction type="file" filePath="/src/App.tsx">
// File content here
</boltAction>

<boltAction type="command">
npm install react
</boltAction>
```

The LLM generates these tags as part of its text output. A parser extracts them and executes the actions. It's simpler and more reliable, but also more limited. That's the tradeoff.
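Here is a minimal parser for the two tags shown above, written as a regex sketch. It assumes the attributes always appear in that order (`type`, then an optional `filePath`) and that the full response is already available. A production parser would likely also need to handle streamed output, where a tag may be only half-written.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumes attribute order: type, then optional filePath
ACTION_RE = re.compile(
    r'<boltAction\s+type="(?P<type>\w+)"(?:\s+filePath="(?P<path>[^"]+)")?\s*>'
    r"(?P<body>.*?)</boltAction>",
    re.DOTALL,  # let the body span multiple lines
)

@dataclass
class Action:
    type: str            # "file" or "command" in the examples above
    path: Optional[str]  # only set for file actions
    body: str            # file contents or the shell command

def parse_actions(llm_output: str) -> list[Action]:
    """Extract actions in the order the model emitted them; other text is ignored."""
    return [
        Action(m["type"], m["path"], m["body"].strip("\n"))
        for m in ACTION_RE.finditer(llm_output)
    ]

output = """Setting up the project.
<boltAction type="file" filePath="/src/App.tsx">
export default function App() { return <h1>Hi</h1>; }
</boltAction>
<boltAction type="command">
npm install react
</boltAction>"""

for action in parse_actions(output):
    print(action.type, action.path, repr(action.body))
```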

Explicit Tools (what Cursor and advanced systems use):

Formal function definitions

Direct API access

External service integration

Implicit Tools (what Bolt uses):

Terminal commands are just text generation

File operations are structured text output

The web container parses and executes everything

Bolt is simpler and more reliable but limited in scope. It can't access external APIs or your local file system. That's not a bug - it's a feature that makes it safe and predictable.
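For contrast, here is roughly what the explicit approach looks like on the application side. Instead of inline tags, the model returns a structured tool call (a name plus JSON arguments), and the app validates and dispatches it. This is a framework-agnostic sketch. The JSON shape, the `get_user` tool, and the `TOOLS` registry are assumptions, and real providers each define their own tool-call format.

```python
import json
from typing import Any, Callable

# Stand-in for a real database or external service (hypothetical data)
FAKE_DB = {"u1": {"name": "Ada", "unread": 3}}

def get_user(user_id: str) -> dict:
    """Hypothetical tool; in production this would query a real database or API."""
    return FAKE_DB[user_id]

# Registry of tools the model is allowed to call, keyed by name
TOOLS: dict[str, Callable[..., Any]] = {"get_user": get_user}

def dispatch(tool_call_json: str) -> Any:
    """Parse a structured tool call, reject unknown tools, and invoke the function."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**args)

print(dispatch('{"name": "get_user", "arguments": {"user_id": "u1"}}'))
```

The app decides which functions exist and runs them itself. That is what opens the door to real APIs and databases. It is also why every added tool widens what can go wrong.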

4. The Web Container: Sandboxed Execution

Bolt runs everything in a WebAssembly container - essentially a browser-based sandbox. When you see code executing, it's running in:

Complete isolation from your system

Network access only where the browser's CORS rules allow it

No persistent storage

Memory cleared between sessions

This is why Bolt feels safe to use. Even if someone tries prompt injection, the worst they can do is break their own session.
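Here is a toy Python model of those isolation properties. File actions write to a per-session dictionary instead of the real disk. Commands are only recorded, never run on the host, and are checked against an allowlist. This is an analogy, not how WebContainers work internally (they emulate a Node.js environment inside the browser tab). The class and the allowlist are hypothetical.

```python
import shlex

class SessionSandbox:
    """Toy isolated session: nothing here touches the host machine."""

    # Hypothetical allowlist of executables the sandbox accepts
    ALLOWED = {"npm", "node", "npx"}

    def __init__(self) -> None:
        self.files: dict[str, str] = {}  # in-memory "disk", gone when the session object is discarded
        self.log: list[str] = []         # accepted commands (recorded, not executed)

    def write_file(self, path: str, content: str) -> None:
        self.files[path] = content

    def run(self, command: str) -> None:
        argv = shlex.split(command)
        if not argv or argv[0] not in self.ALLOWED:
            raise PermissionError(f"blocked command: {command!r}")
        self.log.append(command)

session = SessionSandbox()
session.write_file("/src/App.tsx", "export default function App() {}")
session.run("npm install react")
```

A fresh `SessionSandbox()` starts empty, which mirrors "memory cleared between sessions". A bad command can only affect the current session's state.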

What's Missing (And Why It Matters)

No Evaluation System

During our session, Tal's demo failed with an import error. Bolt tried to import a package that didn't exist. The system didn't know why it failed - it just offered to "attempt fix."

Here's a simple eval that would have caught this:

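```python
import re

# Matches `import x from "pkg"`, `import { y } from 'pkg'`, and `import "pkg"` in JS/TS
IMPORT_RE = re.compile(r"""^\s*import\s+(?:[^'"]*?\s+from\s+)?['"]([^'"]+)['"]""", re.MULTILINE)

# Assumption: packages installed in the environment, e.g. read from package.json
AVAILABLE_PACKAGES = {"react", "react-dom"}

def extract_imports(generated_code):
    """Return the package names imported by JS/TS code, skipping relative paths."""
    packages = set()
    for spec in IMPORT_RE.findall(generated_code):
        if spec.startswith("."):
            continue  # local file such as './App.css', not a package
        parts = spec.split("/")
        # "@scope/pkg/sub" -> "@scope/pkg"; "pkg/sub" -> "pkg"
        packages.add("/".join(parts[:2]) if spec.startswith("@") else parts[0])
    return packages

def test_import_validity(generated_code):
    """Check if all imports in generated code actually exist"""
    for imp in sorted(extract_imports(generated_code)):
        # Check against known packages in the environment
        if imp not in AVAILABLE_PACKAGES:
            return f"Invalid import: {imp}"
    return "All imports valid"
```

At Arize, every AI feature ships with evals. Without them, you have no idea if your prompt changes help or hurt. You're flying blind.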
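To tell whether a prompt change helped or hurt, you can run the same check over a fixed set of test requests for two prompt versions and compare pass rates. Here is a minimal sketch. `generate` is a hypothetical callable that maps (system prompt, request) to code, and `passes` is any eval that returns True or False. The fake generator and the package name `party-popper` are made up so the example runs offline.

```python
from typing import Callable

def pass_rate(
    generate: Callable[[str, str], str],  # hypothetical: (system_prompt, request) -> code
    system_prompt: str,
    requests: list[str],
    passes: Callable[[str], bool],        # any eval, e.g. "all imports valid"
) -> float:
    """Fraction of test requests whose generated code passes the eval."""
    if not requests:
        raise ValueError("need at least one test request")
    results = [passes(generate(system_prompt, r)) for r in requests]
    return sum(results) / len(results)

def compare_prompts(generate, old_prompt, new_prompt, requests, passes) -> dict[str, float]:
    """Score both prompt versions on the same requests with the same eval."""
    return {
        "old": pass_rate(generate, old_prompt, requests, passes),
        "new": pass_rate(generate, new_prompt, requests, passes),
    }

# Fake generator: only the new prompt stops the "model" from importing a missing package
def fake_generate(prompt: str, request: str) -> str:
    if "only installed packages" in prompt:
        return "import React from 'react';"
    return "import confetti from 'party-popper';"

def no_unknown_imports(code: str) -> bool:
    return "party-popper" not in code

print(compare_prompts(fake_generate, "You are Bolt...",
                      "You are Bolt... Use only installed packages.",
                      ["notification system", "financial planner"], no_unknown_imports))
# → {'old': 0.0, 'new': 1.0}
```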

Starting Too Complex

Look at what Bolt's MVP didn't include:

What they skipped:

Multiple specialized agents ("UI agent", "backend agent", "database agent")

Memory between sessions

Custom fine-tuned models

External API integrations

Complex state management

What they focused on:

One good system prompt

Fast code generation

Instant preview

Reliable execution

This focus is why they could iterate quickly. Every feature they didn't build was a complexity they didn't have to debug.

The Improvement Loop in Action

Look at Bolt's prompt evolution again:

Users complain outputs are too long → Add "Do not be verbose"

Files get named wrong → Add "Follow naming conventions"

Still breaking → Escalate to "ULTRA IMPORTANT"

Each rule in their prompt is a lesson learned. This is the compound loop that makes AI products better:

Better Prompts → Better Outputs → More Users → More Edge Cases → Better Prompts

Without this loop, you ship once and stagnate.

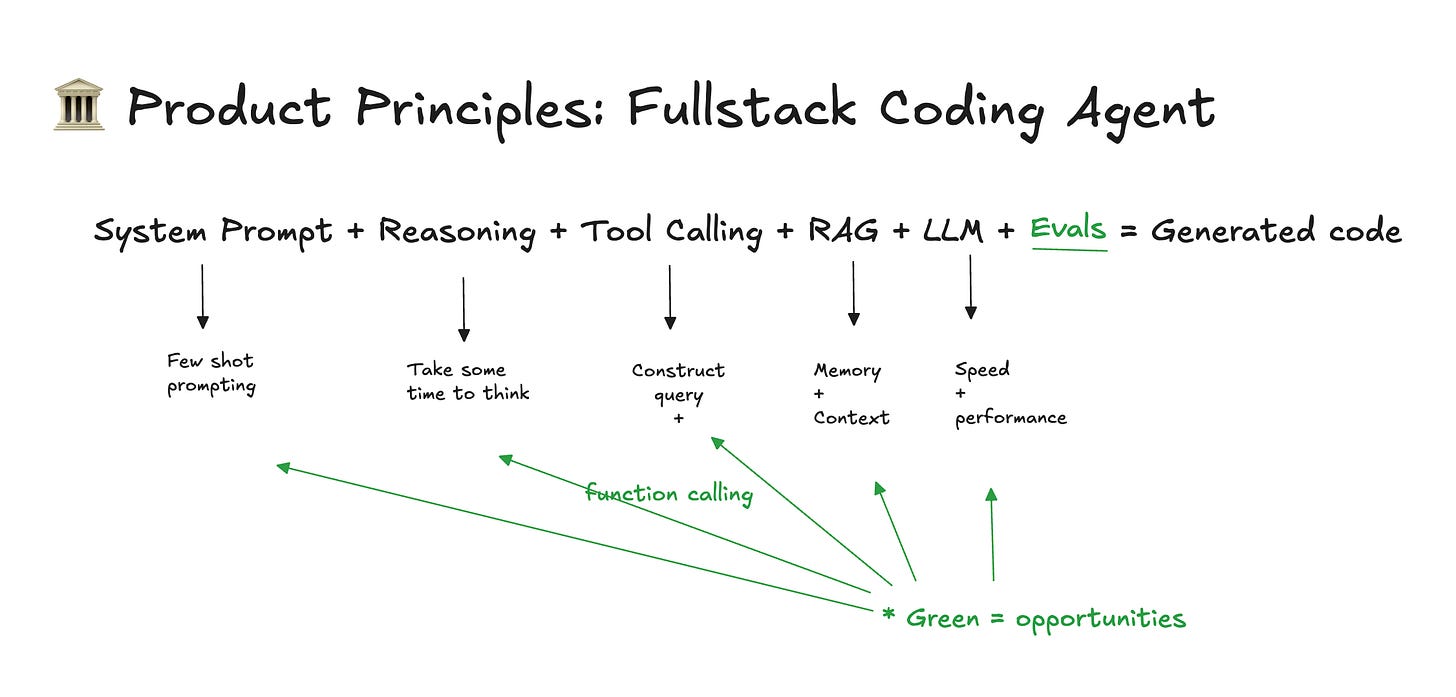

The Hidden Opportunities

For Builders:

Add explicit function calling: Connect to real APIs, databases, and services

Implement comprehensive evals: Catch errors before users do

Build domain-specific versions: Bolt for mobile apps, Bolt for data pipelines, Bolt for game development

For Product Teams:

Start with prototyping: Use Bolt to validate ideas before engineering builds them

Study the patterns: That notification system Tal built? Your product probably needs similar real-time features

Think beyond code: The same architecture works for generating designs, documents, workflows

My Challenge to You

Here's what I want you to do this week:

Try the same prompt in Bolt, Lovable, and v0. Notice how different architectures produce different results.

Read Bolt's system prompt. It's all public in their GitHub repo. What would you add or remove?

Build a simple eval. Pick one thing that could go wrong (like import errors) and write a check for it. This skill will become invaluable.

Bonus: How would an LLM-as-a-judge eval work for this system? Hint: Can you evaluate the output from the product as a human?

Ask yourself: What repetitive task in your domain could be automated with this architecture?

The Real Lesson

Bolt hit $100M in revenue not by being the most sophisticated AI product, but by being the most focused. They found one thing - turning text into UI code - and executed it exceptionally well.

The magic isn't in the technology. It's in the discipline to keep things simple while solving a real problem.

The best AI product is the one that ships and improves daily. Everything else is just theory.

Resources

Watch the full teardown session - See Tal and me work through this analysis live

My deep dive on evals - Why they're the most important skill for AI PMs

Bolt's architecture diagram - The visual breakdown of how it all fits together

Phoenix (open source) - Free tools for implementing evals in your products

What's Next?

I'm launching my first course on AI Product Management this summer where we'll go much deeper into building production AI systems. We'll cover evals, agent architectures, and the practical skills you need to ship AI products that actually work.

But more importantly - what will you build? The tools are all there. The patterns are proven. The only question is which problem you'll solve first.

Let me know what you're working on. I'm always excited to see how people apply these patterns in new domains.

Thanks to Tal Raviv for partnering on this analysis and for being an incredible co-host. Check out his AI for Product Managers course if you want to level up your AI skills.

Have questions about building AI products? Find me on LinkedIn or X (formerly Twitter). I love talking shop with builders.