The Five Skills I Actually Use Every Day as an AI PM (And How You Can Too)

A conversation with Aakash Gupta on my actual workflow as an AI PM

Let me start with some honesty. When people ask me "Should I become an AI PM?", I tell them they're asking the wrong question.

Here's what I've learned: becoming an AI PM isn't about chasing a trendy job title. It's about developing concrete skills that make you more effective at building products in a world where AI touches everything.

Every PM is becoming an AI PM, whether they realize it or not. Your payment flow will have fraud detection. Your search bar will have semantic understanding. Your customer support will have chatbots.

Think of AI product management as less of an OR and more of an AND.

For example: AI x HealthTech PM, or AI x Fintech PM.

The Five Skills I Actually Use Every Day

After ~9 years of building AI products (the last 3 of which have been a complete ramp-up on LLMs and agents), here are the skills I use constantly. Not the ones that sound good in a blog post, but the ones I literally used yesterday.

AI prototyping

Observability (Akin to Telemetry)

AI Evals: The New PRD for AI PMs

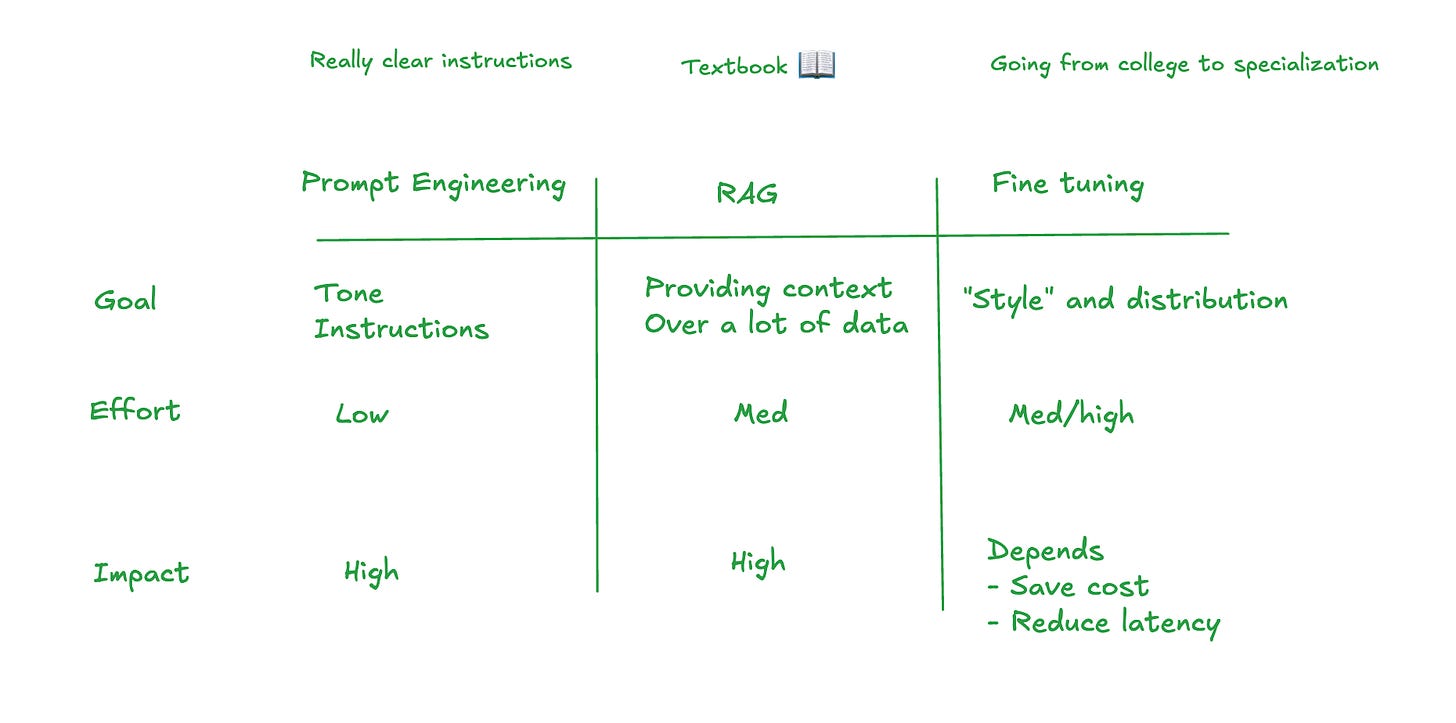

RAG vs. Fine-Tuning vs. Prompt Engineering

Working with AI Engineers

1. Prototyping: Why I Code Every Week

Last month, our design team spent two weeks creating beautiful mocks for an AI agent interface. It looked perfect. Then I spent 30 minutes in Cursor building a functional prototype, and we immediately discovered three fundamental UX problems the mocks hadn't revealed.

The skill: Using AI-powered coding tools to build rough prototypes

The tool: Cursor (a fork of VS Code where you can describe what you want in plain English)

Why it matters: AI behavior is impossible to understand from static mocks

How to start this week:

Download Cursor

Build something stupidly simple (I started with a personal website landing page)

Show it to an engineer and ask what you did wrong

Repeat

You're not trying to become an engineer. You're trying to understand constraints and possibilities.

2. Observability: Debugging the Black Box

Arize docs on AI Product Manager Resources - more coming here!

Observability is how you actually peek under the hood and see how your agent is working.

The skill: Using traces to understand what your AI actually did

The tool: Any APM that supports LLM tracing (we use our own at Arize, but there are many)

Why it matters: "The AI is broken" is not actionable. "The context retrieval returned the wrong document" is.
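The following toy trace in Python makes "the context retrieval returned the wrong document" concrete. It is a minimal sketch that uses only the standard library. It is not Arize's API or any APM's API. The `Span` and `Trace` classes, the two-document knowledge base, and the word-overlap retriever are all hypothetical stand-ins. Each step of a single request records a span, so when the final answer is wrong you can see which step caused it.

```python
from dataclasses import dataclass, field

@dataclass
class Span:
    """One step of a request: a name plus whatever we recorded about it."""
    name: str
    attributes: dict = field(default_factory=dict)

@dataclass
class Trace:
    """All spans recorded for one user request, in execution order."""
    spans: list = field(default_factory=list)

    def record(self, name, **attributes):
        self.spans.append(Span(name, attributes))

# Hypothetical knowledge base and a deliberately naive retriever:
# it returns the document sharing the most exact words with the query
# (ties go to whichever document comes first).
DOCS = {
    "refund-policy": "refunds are issued within 30 days of purchase",
    "shipping-faq": "orders ship within 2 business days of purchase",
}

def retrieve(query):
    words = set(query.lower().split())
    return max(DOCS, key=lambda doc_id: len(words & set(DOCS[doc_id].split())))

def answer(query, trace):
    trace.record("user_query", text=query)
    doc_id = retrieve(query)
    trace.record("retrieval", doc_id=doc_id)
    # Stand-in for the LLM call: it can only use the context it was handed.
    reply = f"According to {doc_id}: {DOCS[doc_id]}"
    trace.record("llm", context_doc=doc_id, output=reply)
    return reply

trace = Trace()
answer("how many days until my order ships", trace)
for span in trace.spans:
    print(span.name, span.attributes)
```

When you run it, the final answer quotes the refund policy in response to a shipping question. The spans show why. The naive retriever matches neither "ships" nor "order" exactly, so both documents tie on "days" and the first one wins. As a result, the `retrieval` span records `refund-policy`. The fix belongs in retrieval, not in the prompt.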

Your first observability exercise:

Pick any AI product you use daily

Try to trigger an edge case or error

Write down what you think went wrong internally

Building this mental model is 80% of the skill

3. Evaluations: Your New Definition of "Done"

If you haven't checked it out yet, here's a primer on evals I worked on with Lenny.

Vibe coding works if you’re shipping prototypes. It doesn’t really work if you’re shipping production code.

The skill: Turning subjective quality into measurable metrics

The tool: Start with spreadsheets, graduate to proper eval frameworks

Why it matters: You can't improve what you can't measure

Build your first eval:

Pick one quality dimension (conciseness, friendliness, accuracy)

Create 20 examples, both good and bad

Label each one: "verbose" or "concise"

Score your current system

Set a target: 85% of responses should be "just right"

That number is now your north star

Iterate until you hit it
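Once the labels are exported from your spreadsheet, the steps above fit in a few lines of Python. This is a sketch under a few assumptions. The rows, the `score` helper, and treating "concise" as the passing label are all illustrative. A real eval set would contain your ~20 labeled examples rather than five.

```python
# Hypothetical eval rows exported from a spreadsheet: response text plus a
# human label for one quality dimension (conciseness).
EVAL_SET = [
    {"response": "Your refund was issued today.", "label": "concise"},
    {"response": "Your order ships Friday.", "label": "concise"},
    {"response": "Thanks so much for reaching out! We truly value you, and "
                 "we want to make sure that every detail of your refund...",
     "label": "verbose"},
    {"response": "You can reset your password under Settings.", "label": "concise"},
    {"response": "Yes, that plan includes priority support.", "label": "concise"},
]
TARGET = 0.85  # share of responses that must get the passing label

def score(rows, good_label="concise"):
    """Fraction of rows whose human label is the passing label."""
    if not rows:
        raise ValueError("eval set is empty")
    return sum(row["label"] == good_label for row in rows) / len(rows)

pass_rate = score(EVAL_SET)
verdict = "target met" if pass_rate >= TARGET else "keep iterating"
print(f"{pass_rate:.0%} concise (target {TARGET:.0%}): {verdict}")
```

Four of the five rows are labeled concise, so this reports an 80% pass rate against the 85% target, and the verdict is to keep iterating. Re-score after each prompt or model change to see whether the number moved.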

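4. Technical Intuition: Knowing Your Options

Say you want your AI support agent to respond in your brand voice. Here are four ways to get there, with rough timelines: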

Prompt engineering (1 day): Add brand voice guidelines to the system prompt

Few-shot examples (3 days): Include examples of on-brand responses

RAG with style guide (1 week): Pull from our actual brand documentation

Fine-tuning (1 month): Train a model on our support transcripts

Each has different costs, timelines, and tradeoffs. My job is knowing which to recommend.
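The following rough Python sketch shows how the first three options differ in what actually gets sent to the model. It assumes the common role/content chat-message format and calls no real model. The brand-voice text, the example exchange, and the style-guide rules are invented placeholders. Fine-tuning is left out because it changes the model's weights during training rather than what you send at request time.

```python
# Hypothetical brand assets; replace with your own.
BRAND_VOICE = "Be warm, plain-spoken, and brief. Avoid jargon."
FEW_SHOT = [
    ("Where is my order?",
     "Happy to check! Your order shipped yesterday and should arrive Friday."),
]
STYLE_GUIDE = {  # keyword -> style-guide rule
    "refund": "State the refund amount and timeline in the first sentence.",
    "delay": "Apologize once, then move straight to the new delivery date.",
}

def prompt_engineering(question):
    """Option 1: brand voice lives only in the system prompt."""
    return [
        {"role": "system", "content": BRAND_VOICE},
        {"role": "user", "content": question},
    ]

def few_shot(question):
    """Option 2: same system prompt plus example exchanges that show the voice."""
    messages = [{"role": "system", "content": BRAND_VOICE}]
    for user_turn, on_brand_reply in FEW_SHOT:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": on_brand_reply})
    messages.append({"role": "user", "content": question})
    return messages

def rag_style_guide(question):
    """Option 3: pull only the style-guide rules relevant to this question.

    A keyword match stands in for a real retriever such as embedding search.
    """
    relevant = [rule for keyword, rule in STYLE_GUIDE.items()
                if keyword in question.lower()]
    return [
        {"role": "system", "content": "\n".join([BRAND_VOICE, *relevant])},
        {"role": "user", "content": question},
    ]

# Option 4 (fine-tuning) is not shown: it changes the model's weights at
# training time, so the request itself may need little brand guidance.
for build in (prompt_engineering, few_shot, rag_style_guide):
    print(build.__name__, len(build("When will my refund arrive?")), "messages")
```

The three request-time options differ only in what goes into the context window. That is part of why you can try them, and swap one for another, without retraining anything.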

Building intuition without building models:

When you see an AI feature you like, write down three ways they might have built it

Ask an AI engineer if you're right

Wrong guesses teach you more than right ones

5. The New PM-Engineer Partnership

The biggest shift? How I work with engineers.

Old way:

I write requirements

They build it

We test it

Ship

New way:

We label training data together

We define success metrics together

We debug failures together

We own outcomes together

Last month, I spent two hours with an engineer labeling whether responses were "helpful" or not. We disagreed on a lot of them. This taught me that I need to start collaborating on evals with my AI engineers.

I'll have more on this topic in an upcoming post 🔜
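One way to put a number on that kind of labeling disagreement is Cohen's kappa. It is a standard inter-annotator agreement statistic that discounts the agreement two labelers would reach by chance alone. The following is a minimal standard-library Python sketch, and the two label lists are made up for illustration.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: both labelers pick the same label independently,
    # each at their own observed label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both labelers used one identical label for everything
    return (observed - expected) / (1 - expected)

# Hypothetical "helpful" labels from a PM and an engineer on the same six responses.
pm_labels = ["helpful", "helpful", "not helpful", "helpful", "not helpful", "helpful"]
eng_labels = ["helpful", "not helpful", "not helpful", "helpful", "helpful", "helpful"]

print(f"kappa = {cohens_kappa(pm_labels, eng_labels):.2f}")
```

These two lists agree on four of six responses (67%), yet kappa is only 0.25, because much of that overlap is what chance alone would produce. A low kappa is a signal to agree on what "helpful" means before trusting any eval score built on those labels.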

Start collaborating differently:

Next feature: ask to join a model evaluation session

Offer to help label test data

Share customer feedback in terms of eval metrics

Celebrate eval improvements like you used to celebrate feature launches

Your Five-Week Transition Plan

Week 1: Tool Setup

Install Cursor

Get access to your company's LLM playground

Find where your AI logs/traces live

Build one tiny prototype (my first took me 3 hours)

Week 2: Observation

Trace 5 AI interactions in products you use

Document what you think happened vs. what actually happened

Share findings with an AI engineer for feedback

Week 3: Measurement

Create your first 20-example eval set

Score an existing feature

Propose one improvement based on the scores

Week 4: Collaboration

Join an engineering model review

Volunteer to label 50 examples

Frame your next feature request as eval criteria
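The following small Python example sketches what "feature request as eval criteria" could look like. Each criterion names a quality dimension, the label that counts as a pass, and a target rate. The feature is ready only when every criterion clears its bar. The `EvalCriterion` class, the `ready_to_ship` helper, and the dimensions and thresholds are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class EvalCriterion:
    dimension: str   # what a labeler judges, e.g. "conciseness"
    good_label: str  # the label that counts as a pass
    target: float    # minimum share of eval examples that must pass

# Hypothetical feature request, written as criteria instead of prose requirements.
FEATURE_REQUEST = [
    EvalCriterion("conciseness", "concise", 0.85),
    EvalCriterion("accuracy", "correct", 0.90),
]

def ready_to_ship(criteria, labels_by_dimension):
    """Return (ok, report); ok is True only if every criterion meets its target."""
    report = {}
    for c in criteria:
        labels = labels_by_dimension[c.dimension]
        rate = sum(label == c.good_label for label in labels) / len(labels)
        report[c.dimension] = (rate, rate >= c.target)
    return all(passed for _, passed in report.values()), report

# Hypothetical labels for a 20-example eval set.
labels = {
    "conciseness": ["concise"] * 18 + ["verbose"] * 2,
    "accuracy": ["correct"] * 17 + ["incorrect"] * 3,
}
ok, report = ready_to_ship(FEATURE_REQUEST, labels)
for dimension, (rate, passed) in report.items():
    print(f"{dimension}: {rate:.0%} {'pass' if passed else 'fail'}")
print("ready to ship" if ok else "not ready")
```

In this example, conciseness passes (90% against an 85% target) but accuracy does not (85% against 90%). As a result, `ok` is `False`, and the report tells the team exactly which bar still needs work.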

Week 5: Iteration

Turn what you learned from prototyping into a production proposal

Set the bar with evals

Use your AI intuition to iterate: which knobs should you turn?

The Uncomfortable Truth

Here's what I wish someone had told me three years ago: You will feel like a beginner again. After years of being the expert in the room, you'll be the person asking basic questions.

That's exactly where you need to be.

The PMs who succeed in AI are the ones who are comfortable being uncomfortable. They're the ones who build bad prototypes, ask "dumb" questions, and treat every confusing model output as a learning opportunity.

Start This Week

Don't wait for the perfect course, the ideal role, or for AI to "stabilize." The skills you need are practical, learnable, and immediately applicable.

Pick one thing from this post. Just one. Do it this week. Then tell someone what you learned.

The gap between PMs who talk about AI and PMs who build with AI is smaller than you think. It's measured in hours of hands-on practice, not years of study.

See you on the other side.

Resources


My Maven course: "Prototype to Production: The AI PM Playbook" (use code 'AAKASHxMAVEN' for $100 off). It's my first time running a cohort, so think of it as "personal training" for getting hands-on with the skills we talk about in this post.